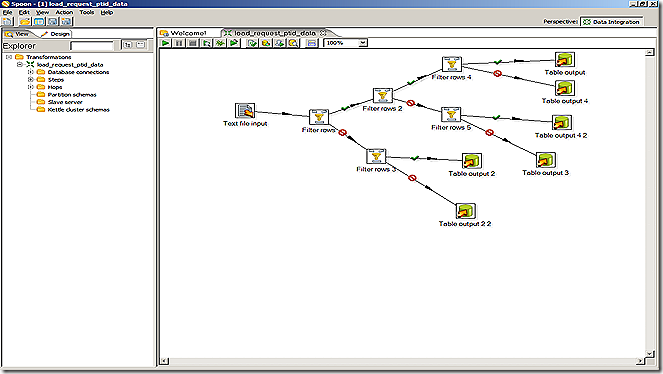

Yesterday I finally decided to try my hand at Microsoft’s ETL tool, SSIS. I have worked with various ETL tools, and this was the one I had never used. It was part of a PoC (proof of concept) that I had to do. The objective was to read a text file and load it into a DB. This was a fairly standard dimension-loading procedure: extract the attributes from the flat file, transform them, and load them into the DB.

The interesting part was creating the surrogate key (primary key). This is a really good way to analyze the capability of your ETL tool.

Informatica gives you several options: you can use the “Sequence Generator Transformation”, a global variable, or a database sequence (if your DB supports it).

Pentaho-Kettle ETL lets you use variables and a “Database Sequence” to deal with this situation.

In SSIS you can do it with the help of an “Execute SQL Task” and a “Script Component” transformation. It uses simple logic:

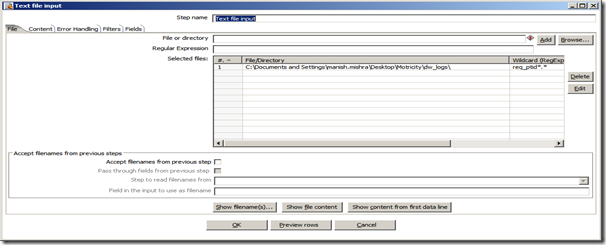

1. Pick data from a source (a flat file in my case).

2. Get the max value of the surrogate key from the database table.

3. Pass it to a solution variable.

4. Use the VB script to assign it to a script variable, which is incremented for each row of data.

5. Concatenate the result with the source columns using a Derived Column transformation.
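The five steps above can be sketched outside SSIS in a few lines of Python. This is only an illustration of the logic, not SSIS code: the table `dim_product`, its columns, the sample file contents and the function name `load_dimension` are all hypothetical, and an in-memory SQLite database stands in for the real target.

```python
import csv
import io
import sqlite3


def load_dimension(conn, flat_file):
    """Assign surrogate keys to flat-file rows, continuing from the table's max key."""
    # Step 2: get the current max surrogate key (0 if the table is empty).
    (max_id,) = conn.execute(
        "SELECT COALESCE(MAX(product_key), 0) FROM dim_product"
    ).fetchone()

    # Step 3: hold it in a variable (the role the solution variable plays in SSIS).
    next_key = int(max_id)

    rows = []
    # Step 1: read the source rows from the flat file.
    for record in csv.reader(flat_file):
        # Step 4: increment the counter once per row.
        next_key += 1
        # Step 5: put the generated key alongside the source column.
        rows.append((next_key, record[0]))

    conn.executemany(
        "INSERT INTO dim_product (product_key, product_name) VALUES (?, ?)", rows
    )
    return rows


# Hypothetical target table that already holds two rows.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, product_name TEXT)"
)
conn.executemany(
    "INSERT INTO dim_product VALUES (?, ?)", [(1, "Widget"), (2, "Gadget")]
)

# Hypothetical flat file with one attribute per line.
source = io.StringIO("Sprocket\nGizmo\n")
new_rows = load_dimension(conn, source)
print(new_rows)
```

Like any "max plus one" scheme, this sketch assumes nothing else inserts into the table while the load is running.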

I googled for a script to increment the variable for each row and found this blog really useful.

There were a few hiccups that were quite frustrating, and it took me almost 2 hours to finally solve them.

First, you should always match the data type of the variable to the return type of the database result set.

Suppose your query returns a SUM and the variable is of type String; you might get this error:

[Execute SQL Task] Error: An error occurred while assigning a value to variable "Max_ID": "The type of the value being assigned to variable "User::Max_ID" differs from the current variable type. Variables may not change type during execution. Variable types are strict, except for variables of type Object. ".
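To make the rule concrete, here is a toy Python model of a strictly typed variable. It is not the SSIS API; the class `StrictVariable` and its behaviour are assumptions that only mimic what the error message describes: once a variable has a type, a value of another type is rejected.

```python
class StrictVariable:
    """Toy stand-in for a typed package variable (hypothetical, not an SSIS class)."""

    def __init__(self, name, initial):
        self.name = name
        self._type = type(initial)  # the declared type is fixed at creation
        self._value = initial

    @property
    def value(self):
        return self._value

    @value.setter
    def value(self, new_value):
        # Reject any value whose type differs from the declared one.
        if type(new_value) is not self._type:
            raise TypeError(
                f"cannot assign {type(new_value).__name__} to {self.name} "
                f"(declared as {self._type.__name__})"
            )
        self._value = new_value


query_result = 42  # stands in for what a SUM or MAX query returns

# Declared as a string: assigning the numeric result fails.
max_id = StrictVariable("User::Max_ID", "")
try:
    max_id.value = query_result
    assigned = True
except TypeError:
    assigned = False

# Declared with the same type the query returns: assignment succeeds.
max_id_fixed = StrictVariable("User::Max_ID", 0)
max_id_fixed.value = query_result
```

The fix in the sketch is the same as in the package: declare the variable with the type the query actually returns.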

The next thing to take care of is the Resultset mapping in the Execute SQL Task editor. When you’re using an ODBC data source, you should set the Resultset Name to 1; otherwise you might get this error:

“[Execute SQL Task] Error: An error occurred while assigning a value to variable "Max_Seq_ID": "Result column index 0 is not valid.".”

Then I tried changing it to the column name I had used in the query, and it gave this error (I was connected to a DB2 database over ODBC):

[Execute SQL Task] Error: An error occurred while assigning a value to variable "Max_Seq_ID": "Value does not fall within the expected range.".

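When I changed the Resultset Name to 1 with the ODBC connection, I got the error below. SQLSTATE HY003 is a data type error, so it likely points at the variable's type rather than at the Resultset Name itself: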

“[Execute SQL Task] Error: An error occurred while assigning a value to variable "Max_Seq_ID": "[IBM][CLI Driver] CLI0122E Program type out of range. SQLSTATE=HY003".”

Then I found out that you need to use “1” as the Resultset Name with ODBC and “0” with ADO.NET.

If you use the alias name from the query as the Resultset Name with ADO.NET, you get:

[Execute SQL Task] Error: An error occurred while assigning a value to variable "Max_Seq_ID": "Result binding by name "dasdas" is not supported for this connection type. ".

So many errors for one simple solution. I don’t want to sound critical, but Informatica is easier when it comes to transformation usability, and its price reflects that.

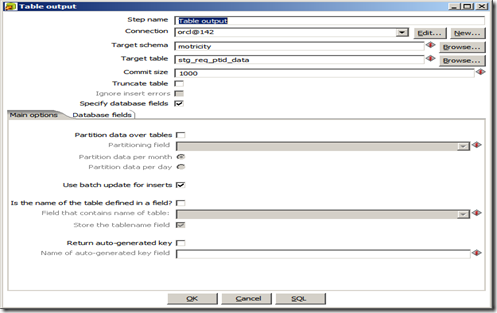

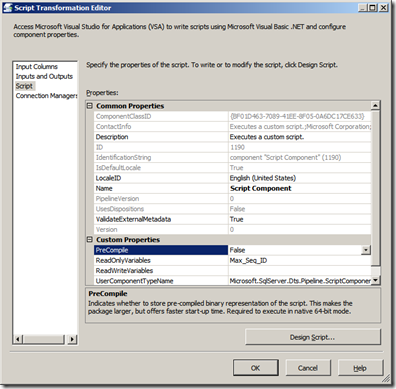

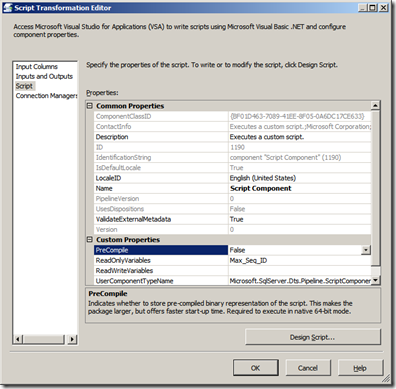

Anyway, once the Execute SQL Task was configured properly, I added a Data Flow Task to the solution, then added a Script Component with its output going to a Derived Column and then to a flat file (you can use a database table as well). In the Script Component editor, make the following changes:

1. Add the variable we used in the Execute SQL Task to ReadOnlyVariables.

2. Add an output column to the “Inputs and Outputs” section with the correct data type and encoding.

3. Write the script with proper type casting between variables and columns.

4. Set the Pre-Compile flag to False, or you might get this error:

“The script component is configured to pre-compile the script, but binary code is not found. Please visit the IDE in Script Component Editor by clicking Design Script button to cause binary code to be generated.”

I used a Derived Column transformation to combine the base column from the flat file with the surrogate key generated by the Script Component.

![clip_image001[4] clip_image001[4]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEiFo8x49i7ijqLJw_XaVUZNJIk87iLPLWwdQAPVfN6xdSG5mu6S6RbREyXQxa5zN9rXkfIkCmT-n74uNZX_kXZDr5k66pKmrtVsRq5K7bGCHj3nWYhfuD9vh0b0EC1NdPl3y5M3YYmF-4M/?imgmax=800)