Couple of months back I needed to do a POC(Proof of Concept) for our BI group, with Open source technology. After some quick analysis we decided to use Pentaho BI solutions. Kettle, Pentaho’s ETL solution was the leading Open source ETL solution, plus we had an experienced resource for Kettle as well. Regarding BI, we had few Java resources who had BI exposure (with BIRT) so we decided to try something new and finalized Pentaho for BI as well.

ETL with Kettle

I have previously worked on Informatica for ETL so I had this mindset so as to compare every other ETL tool with Informatica. But I knew that Kettle is an Open source so I didn’t raise my expectation too high. I downloaded the software from Sourceforge although you can try the Pentaho paid version called Enterprise Edition as well, which comes with an 30 day trial. I’ll discuss more about the features of Enterprise edition later with BI discussion. I couldn’t find any difference in ETL part between Open source and Enterprise edition, except that they call the paid version “Pentaho Data Integration”.

So after all the setup I started exploring Kettle. Unzipped the software and searched for setup.exe or MSI file to start the Installation. But I was surprised to find just few scripts (bat files) at the root. Secondly you don’t have to download different versions for Linux as well. Similar set of scripts(in the sh format) are provided for Linux as well. I really liked that feature. So Plus 1 to the score for Kettle.

After that I searched that which script should I use to start the ETL tool. There were 3 batch files, Spoon, Kitchen and Pan, strange name haan….even Funny as well. Then I searched the Net and found this wiki. Its a really useful link for getting yourself familiarized with Kettle with some basic transformations examples as well. So now I knew that Which tool to use for What:

Kitchen: is the tool used to execute Jobs from a terminal window. Limited functionality as that of Informatica Workflow Manager.

Pan: allows you to execute Transformations from a terminal window.

Spoon: The graphical tool with which you design various Transformations and test them. Similar to Informatica Mapping Designer

Its like this, Create a Transformation with Spoon, test it save it in the repository. Use Pan to run a single

Transformation from command prompt. Kitchen is used to execute Job, which is created to execute a set of Transformations, which are Inter-dependent.

There are few more scripts present alongside the above mentioned like Encr, Carte.

Carte: Carte is a simple web server that allows you to execute transformations and jobs remotely. I really liked this utility. This is similar to what Informatica has Workflow Monitor or Web Admin Console, although not as robust or versatile as Workflow Monitor. It gives you a basic level of User Security as well.

Encr: This is another useful tool for enhanced security. You can encrypt your password as well. Carte allows you to store the passwords in an external file, which I found very strange (from the security perspective), but then I used Encr, which allows you to encrypt the password and then store in the file.

So after this brief about Kettle utilities, I started Spoon, but nothing happened, a command prompt appeared and vanished. Then I opened a command prompt and started Spoon.bat. Then it gave me a proper Error message that I need to set Java Home on my machine. Kettle requires the Sun Java Runtime Environment (JRE) version 1.5 or newer which you can get at Java or from your Local IT department. You need to set the environment variable JAVA_HOME correctly.

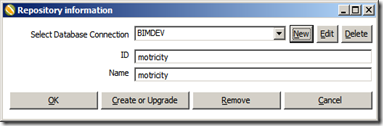

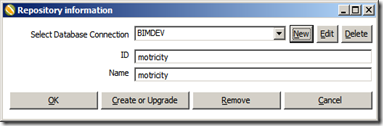

So after this I was able to start Spoon. Now you have to decided what kind of method you want to use to save Kettle Metadata i.e. Repository. Kettle allows you two ways for this, one is File based and the other as usual Database. Benefits are obvious like if you’re working in an distributed environment, use database solution otherwise File based. Secondly database solution allows user security as well.

Database Repository:

File based Repository:

In the next post I’ll discuss my experience with various Transformations which I used with Kettle.